Compute clusters for Debian development and building final report

Summary

-

The goal of this project was intended to have Eucalyptus cloud to

support ARM images so it allows Debian developers and users to be able

to use such facility for taks such package building, software

development (ie. Android) under a Debian pre-set image,

software testing many others.

What was expected to have at the end is a modified version of Eucalyptus

Cloud that supports ARM images on the first place. To date this goal has

been reached but is not complete, read production-ready. Extensive test

needs to be done. Besides that we have another goal which is to get

Debian community to use this new extended tool.

Project overview

-

Eucalyptus is a hybrid cloud computing platform which has a Open Source

(FLOSS) version. It s targetted to be used for PaaS (Platform as a

Service), IaaS (Infrastructure as a Service) and other distribution

models. It can be used also for cloud management. Given that it has

implemented the EC2, for computing, and the S3, for storage, API, it s

compatible with existing public clouds such Amazon EC2. Currently it

supports running i386 and amd64 images or NC (Node Controllers) in

Eucalyptus naming.

Eucalyptus is a complex piece of software. It s architechture it s

modular composed by five components. Cloud Controller (CLC), Walrus (W),

Storage Controller (SC), Cluster Controller (CC) and Node Controller

(NC). The first three are written in Java and the remaining are written

in C. Our project modifications was targetted to the NC component,

altough there is a remaining task for hacking the UI to allow setting

the arch of the uploaded NC image instance.

The Node Controller is in charge of setting the proper information for

Eucalyptus to be able to run virtualized images. Eucalyptus uses XEN and

KVM hyphervisor to handle internal virtualization subsystem, and

Libvirt, which is an abstraction of the existing virtualization

libraries, for program interfacing.

Having that in mind we are back to our project. For our project to be

sucessful we had various tasks to do. Beginning with understanding such

complex piece of software, followed by hacking the requiered bits and

later integrate the work so it results in a useful tool. Our approach to

the project was then inline with such description.

The project s history

I started my participation in GSoC approaching Steffen to discuss about

what was expected, or more accurately, what was in his mind. We

exchanged emails previous to my application and that resulted on my

application being submitted. From the beginning I should tell that I

wasn t much clear on the final product of the project, however we

refined ideas and goals during the weeks following to the official

begining of the project. What was clear for me, after all, is that for

this to see the light and gain adoption Eucalyptus code needed to be

dealt with.

Our first task was review ARM image creation from scratch, using the

work done by Aurelien and Dominique in previous GSoC. I ve managed to

get an updated ARM image running under qemu-system-arm. The issues

presented in the past were almost unexistant now that the versatile

kernel is official. You can see this on my first report.

After that being done and sometimes in parallel my main goal was then understand

the internals of the Eucalyptus software in order to figure out it s

feasibility, where do we need to extend and how big the task is, we

didn t knew upfront. From the beginning of the project Steffen was kind

to introduce me to the Eucalyptus developers and that have resulted in a

good outcome for Debian, IMHO, to date.

Understanding Eucalyptus internals was quite a fun task to say the less. As you

can see on the first part of the report Eucalyptus is modularized and

the component I was expected to work with was the NC (Node

Controller). Given that I isolated my work focus, I started to focus on

learning the internals about that component.

Node Controller as described is in charge of talking with

virtualization hyphervisors in order to arrange things to run guest

image instances. The module has basically one main component: handler.c,

in charge to talk to the appropiate hyphervisor and there are

extensions (think of OOP polymorphism but acknoledge it s plain C)

that interact with KVM or XEN.

I figured that if qemu-kvm is ran in Hyphervisor mode we can manage to

run an ARM image with qemu-system-arm. Given that Eucalyptus has

interaction with KVM hyphervisor in place, this answered the question of

the project s feasibility. First green light on.

>From this point the scope reduced to interacting with the KVM handler

(node/handler_kvm.c) and extend it to support running an image that is

not amd64 or i386.

NC makes use of libvirt to abstract interaction with Hyphervisors. So,

the next phase was learning about it s API and figure what s needed to

be modified next. Libvirt uses a XML file for setting Domain (in

libvirt s naming) definitions. So, Eucalyptus provides a Perl wrapper to

generate this file on runtime and allow the NC to invoke libvirt s API

to run the instance. Next task then, was adapt such script to support

ARM arch. The current script is taiolred for amd64 and i383. I worked on

that front and managed to get a script prototype, that can later be

improved and support more arch s.

Generating an adequate Libvirt s XML Domain definition file for ARM can

be a heroic task. There are many things to have in mind given the

diversity of the ARM processor and vendors. I focused on the versatile

flavour given that I was going to test the image I built on the first

place and it ran fine under qemu-system-arm.

The Perl script wrapper then was adapted for such configuration and it

can be tested independently by issuing the following command:

$ tools/gen_kvm_libvirt_xml arch arm

The arch parameter is what I implemented and it s intended to be

called from the kvm handler on instance creation. With the extensibility

in mind I ve created a hash to associate the arch with their

corresponding emulator.

our %arches = (

amd64 => /usr/bin/kvm ,

arm => /usr/bin/qemu-system-arm ,

);

Added the arch parameter to the GetOptions() function and used

conditions to tell whether the user is looking for a particular arch,

arm in our case. The most important parts to be considered are the

, and entries.

hvm

$local_kvm

There s also and that require to be tailored for arm.

root=0800

As output the script generates an XML template that can be adapted to

your needs and it could be used and tested with tools like Libvirt s

virsh. So that, it can be useful independently of Eucalyptus. I managed

to get to this point before the midterm evaluation.

Now that we had the XML wrapper almost done, the next task was to make

the handler call pass the arch as an argument to the script, so the

image is loaded with the proper settings and we are able to run it.

Eucalyptus doesn t have a arch field for NC s instances. So, after approaching

Eucalyptus developers, with whom we had already being interacting, I

settled to my proposal of extending the ncInstance struct with an arch

field (util/data.h). The ncInstance_t struct stores the metadata for the

instance being created. It s used for storing runtime data as well as

network configuration and more. It was indeed the right place to add a

new field. I did so by creating the archId field.

Now I needed to make sure the arch information is stored and later used on the

libvirt s call.

typedef struct ncInstance_t

char instanceId[CHAR_BUFFER_SIZE];

char imageId[CHAR_BUFFER_SIZE];

char imageURL[CHAR_BUFFER_SIZE];

char kernelId[CHAR_BUFFER_SIZE];

char kernelURL[CHAR_BUFFER_SIZE];

char ramdiskId[CHAR_BUFFER_SIZE];

char ramdiskURL[CHAR_BUFFER_SIZE];

char reservationId[CHAR_BUFFER_SIZE];

char userId[CHAR_BUFFER_SIZE];

char archId[CHAR_BUFFER_SIZE];

int retries;

/* state as reported to CC & CLC */

char stateName[CHAR_BUFFER_SIZE]; /* as string */

int stateCode; /* as int */

/* state as NC thinks of it */

instance_states state;

char keyName[CHAR_BUFFER_SIZE*4];

char privateDnsName[CHAR_BUFFER_SIZE];

char dnsName[CHAR_BUFFER_SIZE];

int launchTime; // timestamp of RunInstances request arrival

int bootTime; // timestamp of STAGING->BOOTING transition

int terminationTime; // timestamp of when resources are released (->TEARDOWN transition)

virtualMachine params;

netConfig ncnet;

pthread_t tcb;

/* passed into NC via runInstances for safekeeping */

char userData[CHAR_BUFFER_SIZE*10];

char launchIndex[CHAR_BUFFER_SIZE];

char groupNames[EUCA_MAX_GROUPS][CHAR_BUFFER_SIZE];

int groupNamesSize;

/* updated by NC upon Attach/DetachVolume */

ncVolume volumes[EUCA_MAX_VOLUMES];

int volumesSize;

ncInstance;

With that in place what was left was modifying the functions that

store/update the ncInstance data, and also the libvirt s function that

calls the gen_kvm_libvirt_xml script. I ve modified the following files:

- util/data.c

- node/handlers_kvm.c

- node/handlers. c,h

- node/test.c

- node/client-marshall-adb.c

Most important the allocate_instance() function that is in charge of setting up

the instance metadata a prepare it to pass it to the handler, then

hyphervisor trough Libvirt s API. The function now has a new archId

parameter as well, to keep coherence with the field name. It also

handles whether the field is set or not. We (Eucalyptus developers and

I) haven t settled wether to initialize this field with a default value

or not. I d stepped up and initialized with a NULL value for this string

type var.

if (archId != NULL)

strncpy(inst->archId, archId, CHAR_BUFFER_SIZE);

Stores the value only if it s passed to the function. This is essential to

don t break existing functionality and keep consistency for later releases.

With this we almost finished the part of extending Eucalyptus to support

arm images. It took quite long to get to that point. Next step: test.

As I mentioned in my previous report the current Eucalyptus packaging

has issues. From the beginning I ve approached the pkg-eucalyptus team,

who were very hepful. So I myself set me up and joined the pkg team with

commit access to the repo. Even the GSoC is not intended for packaging

labour, I needed to clear things on that side because what we want is

adoption so anyone, say a DSA or a user, could setup a cloud that has

support for running arm instances under amd64 arch.

Over the weeks between mid-term evaluation and past week I ve worked on

that front. Results were good, as you can see in the pkg-eucalyptus

mailing list and SVN repo.

Eucalyptus has a couple of issues that because of it s nature and complexity

triggered build errors. Those issues came from the Java side of the project and

few form the C-side. The C-part was a problem with AXIS2 Jar used to generate

stubs for later inclusion on build-time. There s no definitive solution to date

because one of the Makefile on gatherlog/Makefile issues a subshell call that

doesn t catches the AXIS_HOME environment variable. I ve worked around this

by defining it as a session env var from .zshrc. The other problem is related

to the Java libary versioning and most likely (we had to investigate further)

to groovy versioning.

As I explained Eucalyptus has 3 components written in Java. The Java code uses

extensively the AXIS2 library for implementing webservices calls, the groovy

language for JVM, and many other Java libaries. Eucalyptus open source version

ships both online and offline versions. The difference is basically that

the offline version ships the Java libaries JAR files inside the source code,

so you can build with all required deps.

Our former packaging used a file to list which Java libraries the package

depends on and it symlinked them using system wide installed packages. This

rather than helping out triggered a lot of problems I explaing with more detail

on the pkg-eucalyptus ml. I worked around this by telling the debian/rules

not to use such file to symlink and instead use the existing ones from

the source. The last weekend I managed to get to that point and built

sucessfully the software under Debian. I ve done that in order to have a

complete build-cycle for my changes, and then test. In fact today I ve

spotted and fixed a couple of bugs there.

What s available now

To date we have a Eucalyptus branch where my patches are sent. The level of

extension is nearly complete, more testing needed. One missing bit is to

change the UI so that the user selects which arch her image is and that

value is passed to the corresponding functions on the Node Controller

component.

We also have an ARM image that can be tested and later automated. I ve

recently learned about David Went s VMBuilder repo that extends Ubuntu s

vmbuilder to support image creationg. I might talk to him to adapt the tool

in order to support creation of arm images and use it to upload to an

Eucalyptus cloud.

I ve also created a wiki page with different bits on which I was working on.

I ve yet need to craft it to be more educational. I guess I m going to use

this report as a source :)

We also had more strong cooperation with the Eucalyptus project and

involved their developers on the packaging and this project, which is

something I consider a great outcome for this project and GSoC goals:

to attract more people to contribute to FLOSS. I ve also been contacted by

people from the Panda Project and we might cooperate also.

Future work

I plan to keep working on the project regardless the GSoC ends. Our goal

as Debian could be integrating this into the Eucalyptus upstream

branch. We have good relations with them so expect news from that side.

I also plan to keep working with the Eucalyptus team and related

software in Debian, given that I m familiarized with the tools and

projects.

I m also planning to advocate SoC for Debian in my faculty. To date we

had already 4 students, IIRC, that have participated in the past.

Project challenges and lessons

During the project I faced many challenges both in the personal side

and in the technical one. This part is a bit personal but I expect we

learn from this.

First challenge was the where do I sit problem. My project was

particular, indeed quite different from the others on it s nature. I had

to work on a software that is not Debian s and then come and say Hey we

have this nice tool for you, come test it! . So, indeed, my focus was on

the understanding of such tool, Eucalyptus, and not much on looking back

at Debian because I didn t feel I had something to advertise yet and

indeed I got most valuable/useful feedback from Eucalyptus developers

rather than Debian s, which for the case is OK IMHO. I had great

mentoring. This situation probably looked a bit of isolation from my

side, from Debian s POV, but it was not intended it s just how it needed

to be IMHO. I felt that I wasn t contributing directly to the

project. I ve spoke about such thing with Steffen a couple of times. I d

like to say that it was also my concern but I probably failed on

communicating this, second challenge.

Third challenge was more technical and related to the first one. Since I

was not writing any code for a Debian native package/program but instead for

other project I faced the situation of where to publish my

contributions. Eucalyptus had some issues on managing their FLOSS repo

and the current development one is outdated comparing to the

-src-offline 2.0.3 release. The project didn t fit pkg-eucalyptus repo

neither, even we thought to branch the existing trunk and ship my

contribs as quilt patches, but it involved a lot of more work and burn

on reworking something that has issues now. Later on time I branched the

bzr eucalyptus-devel Launchpad repo and synced there with the

src-offline code. I was slow to react on thing I can say.

Fourth challenge was also technical and still dealing with. I was looking to

setup a machine to set a cloud and test my branch. The Dean of my faculty

kindly offered a machine but I ve never managed to get things arranged

with the technical team. Steffen and I discussed about this and evaluated

options, at the end we settled on pushing adoption rather than showcasing it.

Since for adoption we need a use case, I ve spend some SoC money on hardware

for doing this. I should have news this week on that side :)

Finally, I d like to thank everyone on the Debian SoC team for this

opportunity to participate. I like to thank Steffen for his effort on

arranging things for me, Graziano from Eucalyptus for his advice on

working with the software code. The different people from Eucalyptus I

interacted with and showed interest on the project s sucess. My

classmates and teachers from the Computer Science School at UCSP in

Arequipa-Peru, friends from the computing community in Peru SPC and

finally my family. Thank you, I learned a lot, specially on the personal

side. As Randy Pausch put: The brick walls are there to give us a

chance to show how badly we want something.

Resources

Please see the Wiki page[1] for any reference to the project s resources.

1- http://wiki.debian.org/SummerOfCode2011/BuildWithEucalyptus/ProjectLog

Best regards,

Rudy

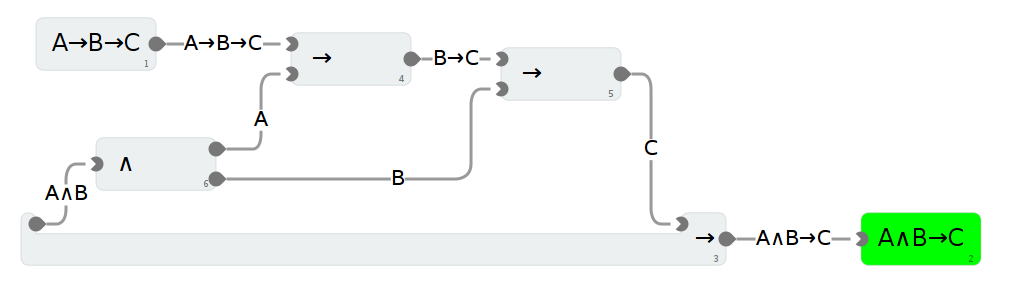

In a few weeks, I will have the opportunity to offer a weekend workshop to selected and motivated high school students1 to a topic of my choice. My idea is to tell them something about logic, proofs, and the joy of searching and finding proofs, and the gratification of irrevocable truths.

While proving things on paper is already quite nice, it is much more fun to use an interactive theorem prover, such as Isabelle, Coq or Agda: You get immediate feedback, you can experiment and play around if you are stuck, and you get lots of small successes. Someone2 once called interactive theorem proving the worlds most geekiest videogame .

Unfortunately, I don t think one can get high school students without any prior knowledge in logic, or programming, or fancy mathematical symbols, to do something meaningful with a system like Isabelle, so I need something that is (much) easier to use. I always had this idea in the back of my head that proving is not so much about writing text (as in normally written proofs) or programs (as in Agda) or labeled statements (as in Hilbert-style proofs), but rather something involving facts that I have proven so far floating around freely, and way to combine these facts to new facts, without the need to name them, or put them in a particular order or sequence. In a way, I m looking for labVIEW wrestled through the Curry-Horward-isomorphism. Something like this:

In a few weeks, I will have the opportunity to offer a weekend workshop to selected and motivated high school students1 to a topic of my choice. My idea is to tell them something about logic, proofs, and the joy of searching and finding proofs, and the gratification of irrevocable truths.

While proving things on paper is already quite nice, it is much more fun to use an interactive theorem prover, such as Isabelle, Coq or Agda: You get immediate feedback, you can experiment and play around if you are stuck, and you get lots of small successes. Someone2 once called interactive theorem proving the worlds most geekiest videogame .

Unfortunately, I don t think one can get high school students without any prior knowledge in logic, or programming, or fancy mathematical symbols, to do something meaningful with a system like Isabelle, so I need something that is (much) easier to use. I always had this idea in the back of my head that proving is not so much about writing text (as in normally written proofs) or programs (as in Agda) or labeled statements (as in Hilbert-style proofs), but rather something involving facts that I have proven so far floating around freely, and way to combine these facts to new facts, without the need to name them, or put them in a particular order or sequence. In a way, I m looking for labVIEW wrestled through the Curry-Horward-isomorphism. Something like this:

Who are you?

My name is Francesca and I'm totally flattered by your intro. The fearless warrior part may be a bit exaggerated, though.

What have you done and what are you currently working on in FLOSS world?

I've been a Debian contributor since late 2009. My journey in Debian has touched several non-coding areas: from translation to publicity, from videoteam to www. I've been one of the www.debian.org webmasters for a while, a press officer for the Project as well as an editor for DPN. I've dabbled a bit in font packaging, and nowadays I'm mostly working as a Front Desk member.

Setup of your main machine?

Wow, that's an intimate question! Lenovo Thinkpad, Debian testing.

Describe your current most memorable situation as FLOSS member?

Oh, there are a few. One awesome, tiring and very satisfying moment was during the release of Squeeze: I was member of the publicity and the www teams at the time, and we had to pull a 10 hours of team work to put everything in place. It was terrible and exciting at the same time. I shudder to think at the amount of work required from ftpmaster and release team during the release. Another awesome moment was my first Debconf: I was so overwhelmed by the sense of belonging in finally meeting all these people I've been worked remotely for so long, and embarassed by my poor English skills, and overall happy for just being there... If you are a Debian contributor I really encourage you to participate to Debian events, be they small and local or as big as DebConf: it really is like finally meeting family.

Some memorable moments from Debian conferences?

During DC11, the late nights with the "corridor cabal" in the hotel, chatting about everything. A group expedition to watch shooting stars in the middle of nowhere, during DC13. And a very memorable videoteam session: it was my first time directing and everything that could go wrong, went wrong (including the speaker deciding to take a walk outside the room, to demonstrate something, out of the cameras range). It was a disaster, but also fun: at the end of it, all the video crew was literally in stitches. But there are many awesome moments, almost too many to recall. Each conference is precious on that regard: for me the socializing part is extremely important, it's what cements relationships and help remote work go smoothly, and gives you motivation to volunteer in tasks that sometimes are not exactly fun.

You are known as Front Desk member for DebConf's - what work does it occupy and why do you enjoy doing it?

I'm not really a member of the team: just one of Nattie's minions!

You had been also part of DebConf Video team - care to share insights

into video team work and benefits it provides to Debian Project?

The video team work is extremely important: it makes possible for people not attending to follow the conference, providing both live streaming and recording of all talks. I may be biased, but I think that DebConf video coverage and the high quality of the final recordings are unrivaled among FLOSS conferences - especially since it's all volunteer work and most of us aren't professional in the field. During the conference we take shifts in filming the various talks - for each talk we need approximately 4 volunteers: two camera operators, a sound mixer and the director. After the recording, comes the boring part: reviewing, cutting and sometimes editing the videos. It's a long process and during the conference, you can sometimes spot the videoteam members doing it at night in the hacklab, exhausted after a full day of filming. And then, the videos are finally ready to be uploaded, for your viewing pleasure. During the last years this process has become faster thanks to the commitment of many volunteers, so that now you have to wait only few days, sometimes a week, after the end of the conference to be able to watch the videos. I personally love to contribute to the videoteam: you get to play with all that awesome gear and you actually make a difference for all the people who cannot attend in person.

You are also non-packaging Debian Developer - how does that feel like?

Feels awesome! The mere fact that the Debian Project decided - in 2009 via a GR - to recognize the many volunteers who contribute without doing packaging work is a great show of inclusiveness, in my opinion. In a big project like Debian just packaging software is not enough: the final result relies heavily on translators, sysadmins, webmasters, publicity people, event organizers and volunteers, graphic artists, etc. It's only fair that these contributions are deemed as valuable as the packaging, and to give an official status to those people. I was one of the firsts non-uploading DD, four years ago, and for a long time it was just really an handful of us. In the last year I've seen many others applying for the role and that makes me really happy: it means that finally the contributors have realized that they deserve to be an official part of Debian and to have "citizenship rights" in the project.

You were the leading energy on Debian's diversity statement - what gave

you the energy to drive into it?

It seemed the logical conclusion of the extremely important work that Debian Women had done in the past. When I first joined Debian, in 2009, as a contributor, I was really surprised to find a friendly community and to not be discriminated on account of my gender or my lack of coding skills. I may have been just lucky, landing in particularly friendly teams, but my impression is that the project has been slowly but unequivocally changed by the work of Debian Women, who raised first the need for inclusiveness and the awareness about the gender problem in Debian. I don't remember exactly how I stumbled upon the fact that Debian didn't have a Diversity Statement, but at first I was very surprised by it. I asked zack (Stefano Zacchiroli), who was DPL at the time, and he encouraged me to start a public discussion about it, sending out a draft - and helped me all the way along the process. It took some back and forth in the debian-project mailing list, but the only thing needed was actually just someone to start the process and try to poke the discussion when it stalled - the main blocker was actually about the wording of the statement. I learned a great deal from that experience, and I think it changed completely my approach in things like online discussions and general communication within the project. At the end of the day, what I took from that is a deep respect for who participated and the realization that constructive criticism does require certainly a lot of work for all parts involved, but can happen. As for the statement in itself: these things are as good as you keep them alive with best practices, but I think that are better stated explicitly rather than being left unsaid.

You are involved also with another Front Desk, the Debian's one which is involved with Debian's New Members process - what are tasks of that FD

and how rewarding is the work on it?

The Debian Front Desk is the team that runs the New Members process: we receive the applications, we assign the applicant a manager, and we verify the final report. In the last years the workflow has been simplified a lot by the re-design of the nm.debian.org website, but it's important to keep things running smoothly so that applicants don't have too lenghty processes or to wait too much before being assigned a manager. I've been doing it for a less more than a month, but it's really satisfying to usher people toward DDship! So this is how I feel everytime I send a report over to DAM for an applicant to be accepted as new Debian Developer:

Who are you?

My name is Francesca and I'm totally flattered by your intro. The fearless warrior part may be a bit exaggerated, though.

What have you done and what are you currently working on in FLOSS world?

I've been a Debian contributor since late 2009. My journey in Debian has touched several non-coding areas: from translation to publicity, from videoteam to www. I've been one of the www.debian.org webmasters for a while, a press officer for the Project as well as an editor for DPN. I've dabbled a bit in font packaging, and nowadays I'm mostly working as a Front Desk member.

Setup of your main machine?

Wow, that's an intimate question! Lenovo Thinkpad, Debian testing.

Describe your current most memorable situation as FLOSS member?

Oh, there are a few. One awesome, tiring and very satisfying moment was during the release of Squeeze: I was member of the publicity and the www teams at the time, and we had to pull a 10 hours of team work to put everything in place. It was terrible and exciting at the same time. I shudder to think at the amount of work required from ftpmaster and release team during the release. Another awesome moment was my first Debconf: I was so overwhelmed by the sense of belonging in finally meeting all these people I've been worked remotely for so long, and embarassed by my poor English skills, and overall happy for just being there... If you are a Debian contributor I really encourage you to participate to Debian events, be they small and local or as big as DebConf: it really is like finally meeting family.

Some memorable moments from Debian conferences?

During DC11, the late nights with the "corridor cabal" in the hotel, chatting about everything. A group expedition to watch shooting stars in the middle of nowhere, during DC13. And a very memorable videoteam session: it was my first time directing and everything that could go wrong, went wrong (including the speaker deciding to take a walk outside the room, to demonstrate something, out of the cameras range). It was a disaster, but also fun: at the end of it, all the video crew was literally in stitches. But there are many awesome moments, almost too many to recall. Each conference is precious on that regard: for me the socializing part is extremely important, it's what cements relationships and help remote work go smoothly, and gives you motivation to volunteer in tasks that sometimes are not exactly fun.

You are known as Front Desk member for DebConf's - what work does it occupy and why do you enjoy doing it?

I'm not really a member of the team: just one of Nattie's minions!

You had been also part of DebConf Video team - care to share insights

into video team work and benefits it provides to Debian Project?

The video team work is extremely important: it makes possible for people not attending to follow the conference, providing both live streaming and recording of all talks. I may be biased, but I think that DebConf video coverage and the high quality of the final recordings are unrivaled among FLOSS conferences - especially since it's all volunteer work and most of us aren't professional in the field. During the conference we take shifts in filming the various talks - for each talk we need approximately 4 volunteers: two camera operators, a sound mixer and the director. After the recording, comes the boring part: reviewing, cutting and sometimes editing the videos. It's a long process and during the conference, you can sometimes spot the videoteam members doing it at night in the hacklab, exhausted after a full day of filming. And then, the videos are finally ready to be uploaded, for your viewing pleasure. During the last years this process has become faster thanks to the commitment of many volunteers, so that now you have to wait only few days, sometimes a week, after the end of the conference to be able to watch the videos. I personally love to contribute to the videoteam: you get to play with all that awesome gear and you actually make a difference for all the people who cannot attend in person.

You are also non-packaging Debian Developer - how does that feel like?

Feels awesome! The mere fact that the Debian Project decided - in 2009 via a GR - to recognize the many volunteers who contribute without doing packaging work is a great show of inclusiveness, in my opinion. In a big project like Debian just packaging software is not enough: the final result relies heavily on translators, sysadmins, webmasters, publicity people, event organizers and volunteers, graphic artists, etc. It's only fair that these contributions are deemed as valuable as the packaging, and to give an official status to those people. I was one of the firsts non-uploading DD, four years ago, and for a long time it was just really an handful of us. In the last year I've seen many others applying for the role and that makes me really happy: it means that finally the contributors have realized that they deserve to be an official part of Debian and to have "citizenship rights" in the project.

You were the leading energy on Debian's diversity statement - what gave

you the energy to drive into it?

It seemed the logical conclusion of the extremely important work that Debian Women had done in the past. When I first joined Debian, in 2009, as a contributor, I was really surprised to find a friendly community and to not be discriminated on account of my gender or my lack of coding skills. I may have been just lucky, landing in particularly friendly teams, but my impression is that the project has been slowly but unequivocally changed by the work of Debian Women, who raised first the need for inclusiveness and the awareness about the gender problem in Debian. I don't remember exactly how I stumbled upon the fact that Debian didn't have a Diversity Statement, but at first I was very surprised by it. I asked zack (Stefano Zacchiroli), who was DPL at the time, and he encouraged me to start a public discussion about it, sending out a draft - and helped me all the way along the process. It took some back and forth in the debian-project mailing list, but the only thing needed was actually just someone to start the process and try to poke the discussion when it stalled - the main blocker was actually about the wording of the statement. I learned a great deal from that experience, and I think it changed completely my approach in things like online discussions and general communication within the project. At the end of the day, what I took from that is a deep respect for who participated and the realization that constructive criticism does require certainly a lot of work for all parts involved, but can happen. As for the statement in itself: these things are as good as you keep them alive with best practices, but I think that are better stated explicitly rather than being left unsaid.

You are involved also with another Front Desk, the Debian's one which is involved with Debian's New Members process - what are tasks of that FD

and how rewarding is the work on it?

The Debian Front Desk is the team that runs the New Members process: we receive the applications, we assign the applicant a manager, and we verify the final report. In the last years the workflow has been simplified a lot by the re-design of the nm.debian.org website, but it's important to keep things running smoothly so that applicants don't have too lenghty processes or to wait too much before being assigned a manager. I've been doing it for a less more than a month, but it's really satisfying to usher people toward DDship! So this is how I feel everytime I send a report over to DAM for an applicant to be accepted as new Debian Developer:

How do you see future of Debian development?

Difficult to say. What I can say is that I'm pretty sure that, whatever the technical direction we'll take, Debian will remain focused on excellence and freedom.

What are your future plans in Debian, what would you like to work on?

Definetely bug wrangling: it's one of the thing I do best and I've not had a chance to do that extensively for Debian yet.

Why should developers and users join Debian community? What makes Debian a great and happy place?

We are awesome, that's why. We are strongly committed to our Social Contract and to users freedom, we are steadily improving our communication style and trying to be as inclusive as possible. Most of the people I know in Debian are perfectionists and outright brilliant in what they do. Joining Debian means working hard on something you believe, identifying with a whole project, meeting lots of wonderful people and learning new things. It ca be at times frustrating and exhausting, but it's totally worth it.

You have been involved in Mozilla as part of OPW - care to share

insights into Mozilla, what have you done and compare it to Debian?

That has been a very good experience: it meant have the chance to peek into another community, learn about their tools and workflow and contribute in different ways. I was an intern for the Firefox QA team and their work span from setting up specific test and automated checks on the three version of Firefox (Stable, Aurora, Nightly) to general bug triaging. My main job was bug wrangling and I loved the fact that I was a sort of intermediary between developers and users, someone who spoke both languages and could help them work together. As for the comparison, Mozilla is surely more diverse than Debian: both in contributors and users. I'm not only talking demographic, here, but also what tools and systems are used, what kind of skills people have, etc. That meant reach some compromises with myself over little things: like having to install a proprietary tool used for the team meetings (and getting crazy in order to make it work with Debian) or communicating more on IRC than on mailing lists. But those are pretty much the challenges you have to face whenever you go out of your comfort zone .

You are also volunteer of the Organization for Transformative Works -

what is it, what work do you do and care to share some interesting stuff?

How do you see future of Debian development?

Difficult to say. What I can say is that I'm pretty sure that, whatever the technical direction we'll take, Debian will remain focused on excellence and freedom.

What are your future plans in Debian, what would you like to work on?

Definetely bug wrangling: it's one of the thing I do best and I've not had a chance to do that extensively for Debian yet.

Why should developers and users join Debian community? What makes Debian a great and happy place?

We are awesome, that's why. We are strongly committed to our Social Contract and to users freedom, we are steadily improving our communication style and trying to be as inclusive as possible. Most of the people I know in Debian are perfectionists and outright brilliant in what they do. Joining Debian means working hard on something you believe, identifying with a whole project, meeting lots of wonderful people and learning new things. It ca be at times frustrating and exhausting, but it's totally worth it.

You have been involved in Mozilla as part of OPW - care to share

insights into Mozilla, what have you done and compare it to Debian?

That has been a very good experience: it meant have the chance to peek into another community, learn about their tools and workflow and contribute in different ways. I was an intern for the Firefox QA team and their work span from setting up specific test and automated checks on the three version of Firefox (Stable, Aurora, Nightly) to general bug triaging. My main job was bug wrangling and I loved the fact that I was a sort of intermediary between developers and users, someone who spoke both languages and could help them work together. As for the comparison, Mozilla is surely more diverse than Debian: both in contributors and users. I'm not only talking demographic, here, but also what tools and systems are used, what kind of skills people have, etc. That meant reach some compromises with myself over little things: like having to install a proprietary tool used for the team meetings (and getting crazy in order to make it work with Debian) or communicating more on IRC than on mailing lists. But those are pretty much the challenges you have to face whenever you go out of your comfort zone .

You are also volunteer of the Organization for Transformative Works -

what is it, what work do you do and care to share some interesting stuff?

Recently the discussions around how to distribute third party applications for "Linux" has become a new topic of the hour and for a good reason - Linux is becoming mainstream outside of free software world. While having each distribution have a perfectly packaged, version-controlled and natively compiled version of each application installable from a per-distribution repository in a simple and fully secured manner is a great solution for popular free software applications, this model is slightly less ideal for less popular apps and for non-free software applications. In these scenarios the developers of the software would want to do the packaging into some form, distribute that to end-users (either directly or trough some other channels, such as app stores) and have just one version that would work on any Linux distribution and keep working for a long while.

For me the topic really hit at Debconf 14 where

Recently the discussions around how to distribute third party applications for "Linux" has become a new topic of the hour and for a good reason - Linux is becoming mainstream outside of free software world. While having each distribution have a perfectly packaged, version-controlled and natively compiled version of each application installable from a per-distribution repository in a simple and fully secured manner is a great solution for popular free software applications, this model is slightly less ideal for less popular apps and for non-free software applications. In these scenarios the developers of the software would want to do the packaging into some form, distribute that to end-users (either directly or trough some other channels, such as app stores) and have just one version that would work on any Linux distribution and keep working for a long while.

For me the topic really hit at Debconf 14 where

This is an essay form of a talk I have given today at the Cambridge

Mini-debconf. The talk was videoed, so will presumably show up in the

Debconf video archive eventually.

Abstract

Debian has a long and illustrious history. However, some of the things

we do perhaps no longer make as much sense as they used to do. It's a

new millennium, and we might find better ways of doing things. Things

that used to be difficult might now be easy, if we dare look at things

from a fresh perspective. I have been doing that at work, for the

Baserock project, and this talk is a compilation of observations based

on what I've learnt, concentrating on things that affect the

development workflow of package maintainers.

Introduction and background

I have been a Debian developer since August, 1996 (modulo a couple of

retirements), and have used it a little bit longer. I have done a

variety of things for Debian, from maintaining PGP 2 packages to

writing

This is an essay form of a talk I have given today at the Cambridge

Mini-debconf. The talk was videoed, so will presumably show up in the

Debconf video archive eventually.

Abstract

Debian has a long and illustrious history. However, some of the things

we do perhaps no longer make as much sense as they used to do. It's a

new millennium, and we might find better ways of doing things. Things

that used to be difficult might now be easy, if we dare look at things

from a fresh perspective. I have been doing that at work, for the

Baserock project, and this talk is a compilation of observations based

on what I've learnt, concentrating on things that affect the

development workflow of package maintainers.

Introduction and background

I have been a Debian developer since August, 1996 (modulo a couple of

retirements), and have used it a little bit longer. I have done a

variety of things for Debian, from maintaining PGP 2 packages to

writing  In the late 90s, google became popular for one reason: because they

had a no-nonsense frontpage that loaded quickly and didn't try to play

with your mind. Well, at least that was my motivation for switching. The

fact that they were using a revolutionary new search algorithm which

changed the way you search the web had nothing to do with it, but was a

nice extra.

Over the years, that small hand-written frontpage has morphed into

something else. A behind-the-scenes look at the page shows that it's no

longer the hand-written simple form of old, but something horrible that

went through a minifier (read: obfuscator). Even so, a quick check

against the

In the late 90s, google became popular for one reason: because they

had a no-nonsense frontpage that loaded quickly and didn't try to play

with your mind. Well, at least that was my motivation for switching. The

fact that they were using a revolutionary new search algorithm which

changed the way you search the web had nothing to do with it, but was a

nice extra.

Over the years, that small hand-written frontpage has morphed into

something else. A behind-the-scenes look at the page shows that it's no

longer the hand-written simple form of old, but something horrible that

went through a minifier (read: obfuscator). Even so, a quick check

against the  thanks to the

thanks to the  My problem was: I had a number of databases generated in different machines and I wanted to query them as if they were one, using the database the data came from as a field while querying and while showing results. The databases are SQLite3 files, generated using SQLAlchemy in a Python program.

I solved this by using SQLAlchemy, which was good because I could use the same ORM mapping that the program used. I noticed that the

My problem was: I had a number of databases generated in different machines and I wanted to query them as if they were one, using the database the data came from as a field while querying and while showing results. The databases are SQLite3 files, generated using SQLAlchemy in a Python program.

I solved this by using SQLAlchemy, which was good because I could use the same ORM mapping that the program used. I noticed that the  The

The  Compute clusters for Debian development and building final report

Summary

Compute clusters for Debian development and building final report

Summary